Welcome to the second post in my series on networking with Azure Kubernetes Service (AKS). In the first post, I explored several key networking configurations that enhance the security of workloads running in AKS. That post covered the following topics:

- Private AKS Cluster

- Application Load Balancing and Traffic Management [Ingress].

In this post, I cover the remaining topics to round out our understanding of AKS networking configurations:

- Selecting the Appropriate Networking Model

- Egress traffic

AKS Networking Model Selection:

AKS offers three networking models for managing container networking: Kubenet, Azure CNI, and Azure CNI Overlay.

Kubenet:

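Kubenet is the basic AKS networking option and works without a complicated setup. It suits small clusters, since it supports a maximum of 400 nodes per cluster. It works well when internal or external load balancers are sufficient for reaching pods from outside the cluster. However, it has limitations: pods receive IP addresses from an address space that is logically separate from the VNet and reach other resources through NAT on the node, which depends on user-defined routes and adds an extra hop.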

Important note: Microsoft will retire kubenet networking for AKS on March 31, 2028. Any AKS cluster that uses kubenet should be migrated to a different networking model, such as Azure CNI, before that date.

Container Network Interface (CNI) – Flat model:

Azure CNI is a networking model that offers advanced features. It gives pods full virtual network connectivity, enabling communication between pods and between pods and virtual machines (VMs). Each pod is assigned a unique IP address from your Azure VNet, so pods are directly routable within the VNet and controls such as network security groups (NSGs) can be applied.

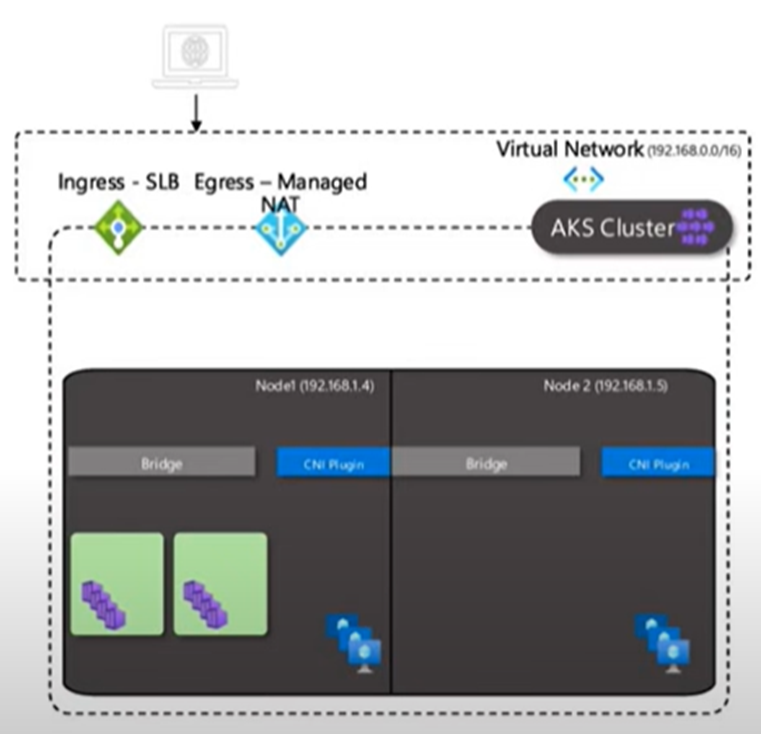

This model is useful when you need advanced AKS features, such as virtual nodes. It works best when enough IP addresses are available and external resources must connect directly to pods. The diagram above provides a high-level overview of the CNI networking model.

Azure CNI has two options:

- Azure CNI Pod Subnet

  - This model assigns IP addresses to pods from a dedicated subnet, separate from the subnet used by the cluster nodes.

  - This is the recommended CNI plugin for flat networking scenarios in AKS.

- Azure CNI Node Subnet [Legacy]

  - This model assigns IP addresses to pods from the same subnet as the cluster nodes.

  - This is a legacy flat network model. It is generally recommended only if you need a managed virtual network (VNet) for your cluster.
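To get a feel for why flat networking needs a large address space, here is a minimal sizing sketch for the Node Subnet variant. It assumes every node reserves one IP for itself plus one for each pod it could host, and it keeps one spare node for upgrades. The function names, the surge default, and the sample CIDRs are my own illustrations, not part of any Azure tooling.

```python
import ipaddress

# Azure reserves five addresses in every subnet.
AZURE_RESERVED_PER_SUBNET = 5


def flat_cni_ips_needed(nodes: int, max_pods_per_node: int, surge_nodes: int = 1) -> int:
    """Estimate VNet IPs consumed when pods share the node subnet.

    Assumption: each node takes one IP for itself and pre-reserves one IP
    per potential pod; surge_nodes covers extra nodes created during upgrades.
    """
    total_nodes = nodes + surge_nodes
    return total_nodes * (1 + max_pods_per_node)


def subnet_fits(cidr: str, ips_needed: int) -> bool:
    """Check whether a subnet has enough usable addresses."""
    usable = ipaddress.ip_network(cidr).num_addresses - AZURE_RESERVED_PER_SUBNET
    return usable >= ips_needed


needed = flat_cni_ips_needed(nodes=50, max_pods_per_node=30)
print(needed)                                # 1581
print(subnet_fits("10.240.0.0/21", needed))  # True
print(subnet_fits("10.240.0.0/22", needed))  # False
```

Even a modest 50-node cluster needs more than a /22 under these assumptions, which is why IP planning matters so much for the flat model.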

CNI Overlay:

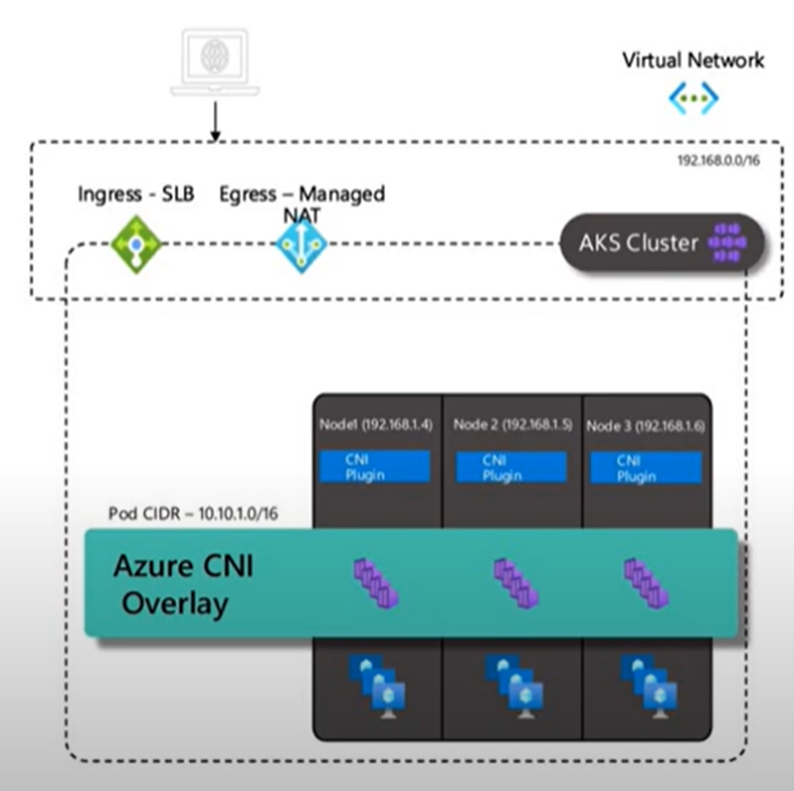

This model aims to address the IP address consumption of the flat CNI model. Each pod gets an IP address from an overlay network whose private CIDR is logically separate from the AKS cluster VNet's IP range; traffic leaving the cluster is translated to the node's IP address. This is helpful for large-scale deployments that have a limited amount of VNet address space.

This model is designed for environments that need to scale to 1,000 nodes or more, with up to 250 pods per node.

Each pod is assigned a private IP address from a CIDR that is separate from the virtual network subnet in which the AKS nodes are deployed. This architecture improves scalability and simplifies management compared to traditional CNI network models.
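The separation between the pod CIDR and the node subnet can be sketched with a small capacity check. This sketch assumes each node is handed a /24 block from the pod CIDR, which is how Azure CNI Overlay is documented to allocate pod addresses; the function names and the sample address ranges are hypothetical.

```python
import ipaddress


def overlay_node_capacity(pod_cidr: str, node_block_prefix: int = 24) -> int:
    """Number of nodes a pod CIDR can serve if each node takes one block.

    Assumption: every node receives a /24 (node_block_prefix) carved
    out of the overlay pod CIDR.
    """
    network = ipaddress.ip_network(pod_cidr)
    if network.prefixlen > node_block_prefix:
        return 0  # pod CIDR is smaller than a single node block
    return 2 ** (node_block_prefix - network.prefixlen)


def cidrs_overlap(pod_cidr: str, node_subnet: str) -> bool:
    """The pod CIDR must not overlap the subnet that hosts the nodes."""
    return ipaddress.ip_network(pod_cidr).overlaps(ipaddress.ip_network(node_subnet))


print(overlay_node_capacity("10.244.0.0/16"))            # 256
print(overlay_node_capacity("10.244.0.0/14"))            # 1024
print(cidrs_overlap("10.244.0.0/16", "10.240.0.0/16"))   # False
```

Because the pod CIDR lives outside the VNet, the node subnet only needs one address per node, no matter how many pods each node runs.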

The diagram above provides a high-level overview of the CNI Overlay networking model.

Design Best Practice: Configure Azure CNI Overlay for dynamic IP allocation for better IP utilization and to prevent IP exhaustion for AKS clusters.

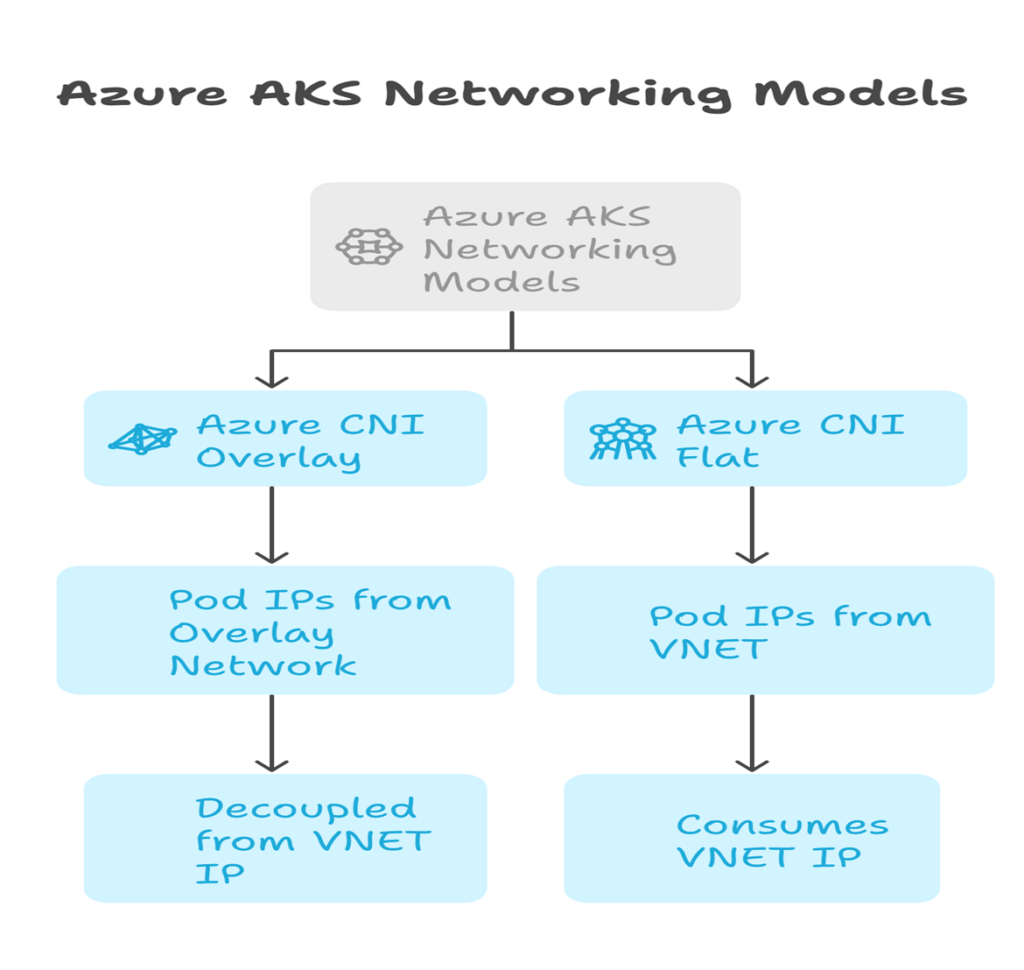

The picture above provides a high-level comparison of the Azure CNI networking models.

Egress Traffic

By default, AKS clusters have unrestricted outbound internet access. The cluster nodes need outbound access on specific ports and to specific fully qualified domain names (FQDNs) to communicate with the API server, receive node security updates, and interact with cluster components.

Azure provides two options to restrict the egress traffic on AKS clusters:

- Azure network-isolated AKS clusters

  - This model provides a simplified way to restrict outbound internet access on the AKS cluster.

  - It is an out-of-the-box configuration that offers two ways to restrict outbound internet access: (a) a private link-based AKS cluster, and (b) API server VNet integration, which is currently in preview.

- Outbound network and FQDN rules

  - To secure outbound traffic, use a firewall device that filters it based on domain names.

  - Azure Firewall or a network virtual appliance (NVA) can be configured to allow the necessary ports and addresses.
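The idea behind FQDN-based egress rules can be illustrated with a simple allow-list check. This is only a sketch of the concept, not how Azure Firewall evaluates rules. The list below is a small, illustrative subset of the FQDNs an AKS cluster typically needs, not the complete set, and `is_egress_allowed` is a hypothetical helper.

```python
from fnmatch import fnmatchcase

# Illustrative subset only; the full list of required FQDNs is in the AKS docs.
ALLOWED_FQDNS = [
    "mcr.microsoft.com",
    "*.data.mcr.microsoft.com",
    "management.azure.com",
    "login.microsoftonline.com",
    "packages.microsoft.com",
]


def is_egress_allowed(host: str, allowed=ALLOWED_FQDNS) -> bool:
    """Return True if the destination host matches an allow-list entry.

    Simplification: '*' is treated as a shell-style wildcard, so it can
    also match several DNS labels at once.
    """
    normalized = host.lower().rstrip(".")
    return any(fnmatchcase(normalized, pattern) for pattern in allowed)


print(is_egress_allowed("mcr.microsoft.com"))              # True
print(is_egress_allowed("eastus.data.mcr.microsoft.com"))  # True
print(is_egress_allowed("example.com"))                    # False
```

Anything not on the list is denied, which is the default-deny posture you want from the firewall in front of the cluster.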

Design Best Practice: Configure Azure network-isolated AKS Clusters for better management of outbound internet traffic.

Pod Level – Egress:

In an AKS cluster, all pods can send and receive traffic by default without restrictions. To improve security, you can create rules that control this traffic. A network policy is a Kubernetes rule that sets access guidelines for pod communication. When you use network policies, you create a set of ordered rules for sending and receiving traffic. You apply these rules to groups of pods that share one or more label selectors.
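As a rough illustration, the sketch below builds a Kubernetes `NetworkPolicy` manifest that limits egress from one group of pods to another. The policy name, namespace, labels, and port are all hypothetical, and the policy only takes effect if a network policy engine is enabled on the cluster.

```python
import json


def egress_policy(name, namespace, pod_labels, allowed_labels, port):
    """Build a NetworkPolicy that lets the selected pods send traffic
    only to pods carrying allowed_labels, on a single TCP port."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # Pods this policy applies to, chosen by label selector.
            "podSelector": {"matchLabels": pod_labels},
            "policyTypes": ["Egress"],
            "egress": [
                {
                    "to": [{"podSelector": {"matchLabels": allowed_labels}}],
                    "ports": [{"protocol": "TCP", "port": port}],
                }
            ],
        },
    }


# Hypothetical example: frontend pods may only call backend pods on 8080.
policy = egress_policy(
    name="frontend-to-backend",
    namespace="demo",
    pod_labels={"app": "frontend"},
    allowed_labels={"app": "backend"},
    port=8080,
)
print(json.dumps(policy, indent=2))
```

The JSON output can be saved to a file and applied with `kubectl apply -f`. Note that a policy like this also blocks DNS lookups from the selected pods unless you add a rule that allows them.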

Design Best Practice: Configure AKS network policy for pod-level communication.

That’s the end of the second blog post. Each configuration has unique features and benefits, providing users with flexible and scalable options for their container networking needs in Azure Kubernetes Service (AKS). This variety allows organisations to choose the most suitable model based on their requirements and use cases. Thanks for your time. Please provide feedback.

Santhosh has over 15 years of experience in the IT industry. He works as a Cloud Infrastructure Architect and has a wide range of expertise in Microsoft technologies, with a specialization in public and private cloud services for enterprise customers. His varied background includes work in cloud computing, virtualization, storage, networks, automation, and DevOps.