This new series of blog posts focuses on the essential networking aspects of hosting applications and workloads in Azure Kubernetes Service (AKS). The primary goal of the series is to present the options available for different networking configurations in AKS [API access, ingress and egress, Azure VNet, and application load balancing]. I aim to provide content that helps keep your AKS journey scalable, secure, and efficient in terms of networking for the services and workloads hosted in your AKS clusters.

AKS Introduction

Before we discuss the options for different networking configurations, let’s review the basics of AKS.

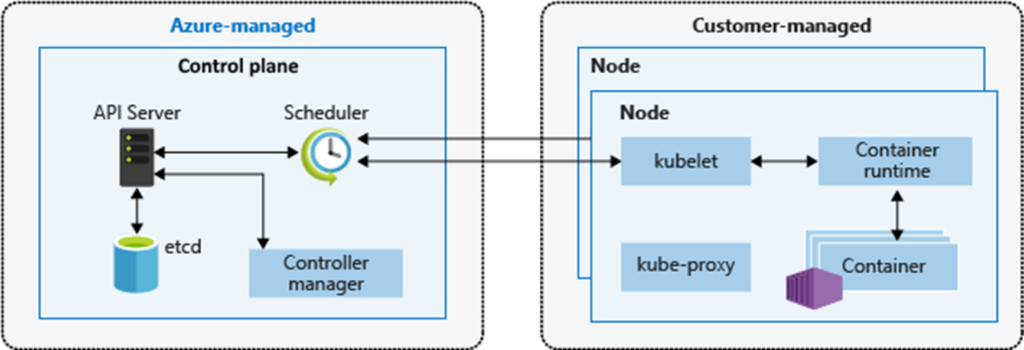

Azure Kubernetes Service (AKS) is a Platform as a Service (PaaS) offering that allows customers to run their containerized applications in the cloud without the hassle of managing the Kubernetes control plane and services. The Azure platform fully manages the control plane at no cost to the customer, and customers are only responsible for managing the worker nodes and pods. The control plane or API server is in an AKS-managed Azure resource group, and the worker or node pool is in your resource group.

To learn more about AKS, please visit the official documentation page. The picture below shows the AKS architecture in the Azure cloud:
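By default, AKS names the node resource group that holds the node pool resources after the cluster's own resource group, cluster name, and region. The sketch below, assuming the default pattern `MC_<resource-group>_<cluster-name>_<region>`, computes that name. The resource group, cluster, and region values are hypothetical, and clusters created with a custom node resource group name will not follow this pattern.

```python
def default_node_resource_group(resource_group: str, cluster_name: str, region: str) -> str:
    """Return the default AKS node resource group name.

    Assumes the default pattern MC_<resource-group>_<cluster-name>_<region>;
    clusters created with a custom node resource group name will differ.
    """
    return f"MC_{resource_group}_{cluster_name}_{region}"


if __name__ == "__main__":
    # Hypothetical names used only for illustration.
    print(default_node_resource_group("rg-aks-demo", "aks-demo", "westeurope"))
    # prints MC_rg-aks-demo_aks-demo_westeurope
```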

In this series, let's discuss the following networking configurations, which help secure workloads in AKS. This post covers the first two:

- Private AKS Cluster

- Application Load Balancing and Traffic Management [Ingress]

- Selection of the right networking model

- Egress Traffic

Private AKS Cluster

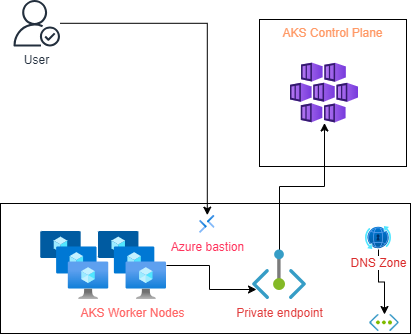

By default, in an AKS cluster, worker nodes connect to the control plane over a public endpoint within the Azure backbone. However, for some use cases, accessing the control plan on the public endpoint is invalid, and they need to keep it within their network [VNET]. The private cluster options in AKS help disable public access to control plane nodes and keep them via a private endpoint.

In AKS private cluster, the control plane [API Server] is allocated an internal private IP, and the network traffic between worker or node pools remains in the private network. This makes a cluster where master nodes are inaccessible from the public internet and the workloads run in an isolated environment. For customers looking to avoid public exposure of their resources, the Private Endpoint would be a solution. The diagram below depicts the private cluster at a high level:

An AKS private cluster disables the public endpoint and creates a private endpoint for accessing the Kubernetes control plane. As a result, kubectl sessions and CD pipelines can reach the cluster only from a network that has access to this private endpoint. To manage the API server, you must use a machine, such as a VM, with network access to the Azure Virtual Network (VNet) that hosts the AKS private cluster. There are several options for establishing network connectivity to the private cluster:

- An Azure VM in the same VNet as the AKS private cluster, or in a VNet peered with it.

- A VM or on-premises machine with hybrid connectivity [ExpressRoute or VPN].

- Azure Bastion, connecting to a VM that has the required connectivity to the AKS private cluster VNet.
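Whichever option you choose, the client needs a network route to the VNet that hosts the private endpoint. The sketch below, assuming a hypothetical address plan (a cluster VNet, one peered VNet, and an on-premises range reached over ExpressRoute or VPN), classifies a client IP address by the path it would use. It only checks address ranges; NSGs, route tables, and DNS must also allow the traffic.

```python
import ipaddress

# Hypothetical address plan; replace with your own ranges.
CLUSTER_VNET = ipaddress.ip_network("10.10.0.0/16")
PEERED_VNETS = [ipaddress.ip_network("10.20.0.0/16")]      # e.g. a hub VNet with a jump box VM
ON_PREM_RANGES = [ipaddress.ip_network("192.168.0.0/16")]  # reached via ExpressRoute or VPN


def connectivity_path(client_ip: str) -> str:
    """Classify how a client could reach the private API server endpoint.

    Returns "same-vnet", "peered-vnet", "hybrid", or "no-route".
    """
    addr = ipaddress.ip_address(client_ip)
    if addr in CLUSTER_VNET:
        return "same-vnet"
    if any(addr in net for net in PEERED_VNETS):
        return "peered-vnet"
    if any(addr in net for net in ON_PREM_RANGES):
        return "hybrid"
    # A public client has no path to the private endpoint.
    return "no-route"


if __name__ == "__main__":
    for ip in ["10.10.4.7", "10.20.1.5", "192.168.50.12", "203.0.113.9"]:
        print(ip, "->", connectivity_path(ip))
```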

Important note: When you create a private AKS cluster, AKS by default creates a private Fully Qualified Domain Name (FQDN) with an Azure private DNS zone, plus an additional public FQDN with a corresponding A record in Azure public DNS. The agent nodes use the record in the private DNS zone to resolve the private IP address of the private endpoint for communication with the API server.
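The private DNS behaviour described in this note can be illustrated with a toy split-horizon resolver. This is a simplified model, not Azure DNS itself: it assumes the `privatelink.<region>.azmk8s.io` zone naming used for the AKS system-managed private DNS zone, and the API server FQDN and private IP address are hypothetical. Clients in a VNet linked to the private zone get the private record; other clients fall back to public DNS.

```python
def private_dns_zone_name(region: str) -> str:
    """Name of the AKS system-managed private DNS zone for a region (assumed pattern)."""
    return f"privatelink.{region}.azmk8s.io"


def resolve(fqdn, linked_zones, private_records, public_records):
    """Toy split-horizon resolver.

    If the name falls inside a private zone linked to the client's VNet, only
    that zone is consulted (no fallback to public DNS in this model);
    otherwise the public records are used. Returns an IP string or None.
    """
    for zone in linked_zones:
        if fqdn == zone or fqdn.endswith("." + zone):
            return private_records.get(fqdn)
    return public_records.get(fqdn)


# Hypothetical values for illustration only.
ZONE = private_dns_zone_name("westeurope")
API_FQDN = f"aks-demo-example.{ZONE}"
PRIVATE_RECORDS = {API_FQDN: "10.10.0.4"}  # private IP of the API server's private endpoint
PUBLIC_RECORDS = {}  # no public record for the privatelink name in this toy model

if __name__ == "__main__":
    # An agent node in a VNet linked to the zone resolves the private IP.
    print(resolve(API_FQDN, [ZONE], PRIVATE_RECORDS, PUBLIC_RECORDS))
    # A client outside any linked VNet gets no answer for this name.
    print(resolve(API_FQDN, [], PRIVATE_RECORDS, PUBLIC_RECORDS))
```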

Design Best Practice: Use an AKS private cluster when you need to restrict access to the API server (control plane) so that only your node pools and clients on, or connected to, your private network can reach it. This helps implement a Zero Trust network.

To learn more about Private AKS Cluster, visit my earlier blog post.

Application Load Balancing and Traffic Management

Load balancing and traffic management are crucial in Azure Kubernetes Service (AKS) to ensure application availability, scalability, and performance. For Layer 7 (application) load balancing, AKS offers two Application Gateway-based options:

- Application Gateway for Containers (AGC).

- Application Gateway Ingress Controller (AGIC).

Application Gateway for Containers.

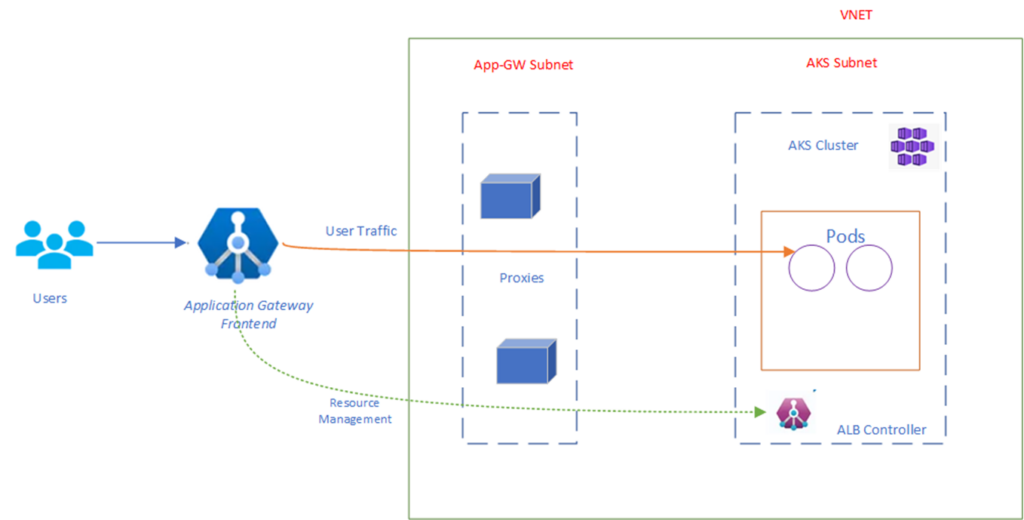

This service is one of my favourite networking services. This is a significant upgrade to the Application Gateway Ingress Controller (AGIC) and its successor. With this powerful Kubernetes application, Azure Kubernetes Service (AKS) users can harness the full potential of Azure’s native Application Gateway application load balancer.

With Azure Application Gateway for Containers, users can effectively route and distribute traffic to their containerized applications, providing load balancing and traffic management capabilities at the application layer 7. The ability to manage both Ingress and Gateway resources with Application Gateway for Containers will provide an easy transition for customers currently using AGIC.

The picture below provides the components involved in AGC:
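To make the Gateway API side of AGC concrete, the sketch below builds a `Gateway` resource as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts just like YAML. The gateway class `azure-alb-external` and the `alb.networking.azure.io/alb-name` and `alb.networking.azure.io/alb-namespace` annotations are assumptions based on the ALB Controller quickstarts and should be verified against the current AGC documentation; all resource names are hypothetical.

```python
import json


def agc_gateway(name: str, namespace: str, alb_name: str, alb_namespace: str) -> dict:
    """Build a Gateway API Gateway that binds to an AGC (ALB Controller) resource.

    The gateway class and annotation keys are assumptions to verify against
    the AGC documentation for your controller version.
    """
    return {
        "apiVersion": "gateway.networking.k8s.io/v1",
        "kind": "Gateway",
        "metadata": {
            "name": name,
            "namespace": namespace,
            "annotations": {
                # Point the Gateway at an ApplicationLoadBalancer managed by the ALB Controller.
                "alb.networking.azure.io/alb-namespace": alb_namespace,
                "alb.networking.azure.io/alb-name": alb_name,
            },
        },
        "spec": {
            "gatewayClassName": "azure-alb-external",
            "listeners": [
                {
                    # Plain HTTP listener; only routes in the Gateway's namespace may attach.
                    "name": "http",
                    "port": 80,
                    "protocol": "HTTP",
                    "allowedRoutes": {"namespaces": {"from": "Same"}},
                }
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical names; save the output to a file and apply it with kubectl.
    print(json.dumps(agc_gateway("gateway-01", "demo-apps", "alb-demo", "alb-infra"), indent=2))
```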

Application Gateway Ingress Controller.

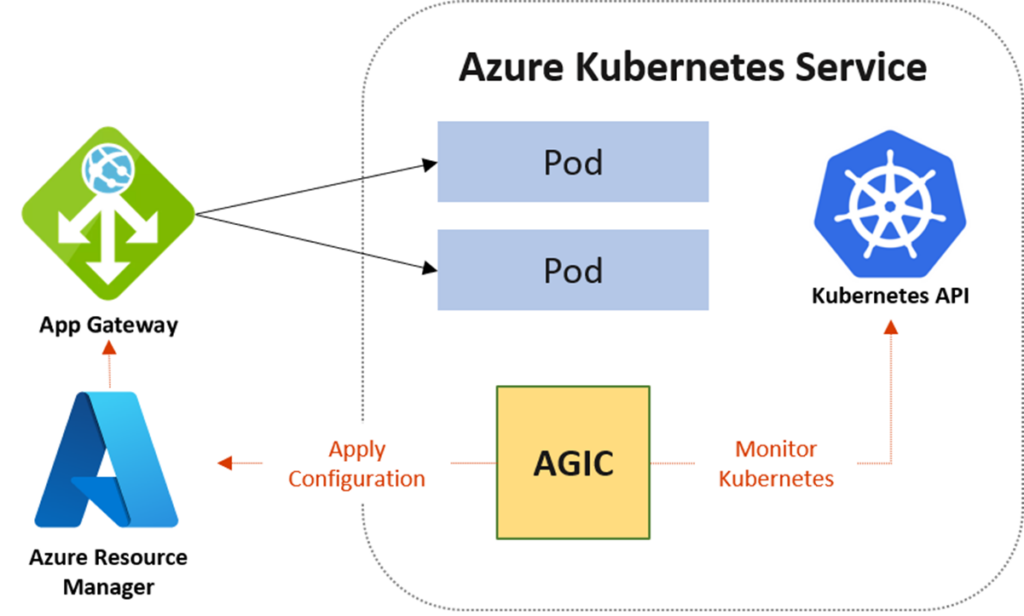

AGIC is the first Azure service to balance the application load of containerised applications. AGIC can provide advanced load balancing, SSL termination, and web application firewall (WAF) capabilities. AGIC keeps track of specific Kubernetes Resources and updates the Application Gateway’s settings accordingly through the Azure Resource Manager (ARM). One drawback is that changes may take some time to occur in a subset of Kubernetes, which applies to AGIC utilising ARM.

The picture below shows the architecture of AGIC:
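Because AGIC is driven by standard Kubernetes Ingress resources, path-based routing is declared in an `Ingress` object. The sketch below builds one as a Python dictionary and prints it as JSON. The ingress class name `azure-application-gateway` (used by the AGIC add-on) is an assumption to check against your installation, and the service names and ports are hypothetical.

```python
import json


def agic_ingress(name: str, namespace: str, routes: list) -> dict:
    """Build a networking.k8s.io/v1 Ingress for AGIC with path-based routing.

    routes is a list of (path_prefix, service_name, service_port) tuples.
    The ingress class name below is an assumption; check your AGIC setup.
    """
    paths = [
        {
            "path": prefix,
            "pathType": "Prefix",
            "backend": {"service": {"name": service, "port": {"number": port}}},
        }
        for prefix, service, port in routes
    ]
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "ingressClassName": "azure-application-gateway",
            "rules": [{"http": {"paths": paths}}],
        },
    }


if __name__ == "__main__":
    # Hypothetical services: /api goes to the API backend, everything else to the web frontend.
    ingress = agic_ingress("shop", "demo-apps", [("/api", "api-svc", 8080), ("/", "web-svc", 80)])
    print(json.dumps(ingress, indent=2))
```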

The table below provides a comparison of AGC and AGIC:

| Feature | Application Gateway for Containers | Application Gateway Ingress Controller |
|---|---|---|
| Support for Ingress | Y | Y |
| TLS termination | Y | Y |
| Path-based routing | Y | Y |
| Header-based routing | Y | N |
| Query string matching | Y | N |
| End-to-end TLS | Y | Y |
| Method-based routing | Y | N |
| Support for Gateway API | Y | N |
| mTLS | Y | N |
| Traffic splitting | Y | N |
| Automatic retries | Y | N |
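Two of the AGC-only features in the comparison, header-based routing and traffic splitting, are expressed through the Kubernetes Gateway API. The sketch below builds an `HTTPRoute` using standard Gateway API fields: requests carrying a chosen header always go to a canary service, and all other traffic is split between stable and canary by weight. The route, gateway, service names, header, and weights are hypothetical.

```python
import json


def canary_route(name, namespace, gateway, stable_svc, canary_svc, port,
                 canary_weight, header_name, header_value):
    """Build a Gateway API HTTPRoute combining header-based routing and traffic splitting."""
    if not 0 <= canary_weight <= 100:
        raise ValueError("canary_weight must be between 0 and 100")
    return {
        "apiVersion": "gateway.networking.k8s.io/v1",
        "kind": "HTTPRoute",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "parentRefs": [{"name": gateway}],
            "rules": [
                {
                    # Header-based routing: requests with this header always reach the canary.
                    "matches": [{"headers": [
                        {"type": "Exact", "name": header_name, "value": header_value}
                    ]}],
                    "backendRefs": [{"name": canary_svc, "port": port}],
                },
                {
                    # Traffic splitting: all other requests are shared by weight.
                    "matches": [{"path": {"type": "PathPrefix", "value": "/"}}],
                    "backendRefs": [
                        {"name": stable_svc, "port": port, "weight": 100 - canary_weight},
                        {"name": canary_svc, "port": port, "weight": canary_weight},
                    ],
                },
            ],
        },
    }


if __name__ == "__main__":
    route = canary_route("shop-route", "demo-apps", "gateway-01",
                         "web-stable", "web-canary", 80, 10, "x-canary", "true")
    print(json.dumps(route, indent=2))
```

Under the Gateway API match-precedence rules, the rule with a header match wins over the plain `/` prefix rule for requests that carry the header, so the 90/10 weights apply only to the remaining traffic.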

Application Traffic Management

When users need to be routed to the nearest service endpoint based on their geographic location, or when an application is deployed across multiple regions, Azure offers services such as Azure Front Door and Azure Traffic Manager that seamlessly route each request to the closest region hosting the application.
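As a rough illustration of routing to the closest region, the sketch below picks the healthy regional endpoint with the lowest measured latency for a user. It is a simplified model in the spirit of Traffic Manager's Performance routing method, not how Front Door or Traffic Manager are implemented internally (both rely on their own health probes and latency data); the regions and latency figures are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class RegionalEndpoint:
    region: str
    healthy: bool        # result of the latest health probe
    latency_ms: float    # measured latency from the user's network to this region


def pick_region(endpoints: List[RegionalEndpoint]) -> Optional[str]:
    """Return the healthy region with the lowest latency, or None if all are down."""
    healthy = [e for e in endpoints if e.healthy]
    if not healthy:
        return None
    return min(healthy, key=lambda e: e.latency_ms).region


if __name__ == "__main__":
    # Hypothetical measurements for a user in Europe.
    endpoints = [
        RegionalEndpoint("westeurope", healthy=False, latency_ms=12.0),
        RegionalEndpoint("northeurope", healthy=True, latency_ms=21.0),
        RegionalEndpoint("eastus", healthy=True, latency_ms=95.0),
    ]
    print(pick_region(endpoints))  # northeurope, because the closest region is unhealthy
```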

To improve security when exposing applications hosted in Azure Kubernetes Service (AKS), Azure Front Door can be used together with Azure Web Application Firewall (WAF) and Azure Private Link. In this setup, Front Door with WAF filters incoming traffic at the edge, and Private Link connects Front Door to the cluster without a public endpoint on the cluster side. The infrastructure can additionally include a third-party NGINX ingress controller, which acts as the backend resource for Azure Front Door and routes traffic to the web applications.
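For the Private Link leg of this setup, the NGINX ingress controller is typically exposed through an internal Azure load balancer with a Private Link service that Front Door can connect to. The sketch below builds such a `Service` manifest as a Python dictionary. The annotations `service.beta.kubernetes.io/azure-load-balancer-internal` and `service.beta.kubernetes.io/azure-pls-create` come from the Azure cloud provider for Kubernetes, but verify them (and any additional Private Link settings your design needs) against the current AKS documentation; the service name, namespace, and selector labels are assumptions.

```python
import json


def nginx_private_link_service(name: str, namespace: str, selector: dict) -> dict:
    """Build a LoadBalancer Service on an internal Azure load balancer with Private Link.

    Annotation keys are assumptions to verify; annotation values must be strings.
    """
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {
            "name": name,
            "namespace": namespace,
            "annotations": {
                # Use a private frontend IP instead of a public one.
                "service.beta.kubernetes.io/azure-load-balancer-internal": "true",
                # Ask the cloud provider to create a Private Link service for Front Door.
                "service.beta.kubernetes.io/azure-pls-create": "true",
            },
        },
        "spec": {
            "type": "LoadBalancer",
            "selector": selector,
            "ports": [
                {"name": "http", "port": 80, "targetPort": 80, "protocol": "TCP"},
                {"name": "https", "port": 443, "targetPort": 443, "protocol": "TCP"},
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical selector; match it to the labels of your NGINX controller pods.
    svc = nginx_private_link_service(
        "ingress-nginx-controller", "ingress-nginx",
        {"app.kubernetes.io/name": "ingress-nginx"},
    )
    print(json.dumps(svc, indent=2))
```

If the controller is installed with a Helm chart, the same annotations usually belong in the chart's service settings rather than in a separately created Service.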

Design Best Practice: Use the Azure cloud-native AGC for application load balancing [L7], with SSL and mTLS capabilities. At the time of writing, AGC lacks WAF functionality, so use AGIC when you need to both expose and protect a workload in Azure Kubernetes Service (AKS) with a WAF. Use Azure Front Door to expose applications to the internet and to serve applications hosted in multiple regions.
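The recommendations in this best practice can be summarised as a small decision helper. This is an illustrative simplification, not an official decision tree; the `agc_supports_waf` flag defaults to False to reflect the WAF gap in AGC at the time of writing and can be set to True once WAF is available for AGC.

```python
def recommend_l7_setup(needs_waf: bool, internet_or_multi_region: bool,
                       agc_supports_waf: bool = False) -> dict:
    """Map the design recommendations to an in-cluster choice plus an optional global edge."""
    if needs_waf and not agc_supports_waf:
        in_cluster = "AGIC"  # Application Gateway WAF in front of the workload
    else:
        in_cluster = "AGC"   # L7 load balancing with SSL/TLS and mTLS
    # Front Door provides the internet-facing, multi-region entry point.
    edge = "Azure Front Door" if internet_or_multi_region else None
    return {"in_cluster": in_cluster, "edge": edge}


if __name__ == "__main__":
    print(recommend_l7_setup(needs_waf=False, internet_or_multi_region=False))
    print(recommend_l7_setup(needs_waf=True, internet_or_multi_region=True))
```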

We will conclude the first part here, and the next post will cover the remaining networking sections on AKS.

Santhosh has over 15 years of experience in the IT industry and works as a Cloud Infrastructure Architect, with a wide range of expertise in Microsoft technologies and a specialization in public and private cloud services for enterprise customers. Santhosh's varied background includes work in cloud computing, virtualization, storage, networks, automation, and DevOps.